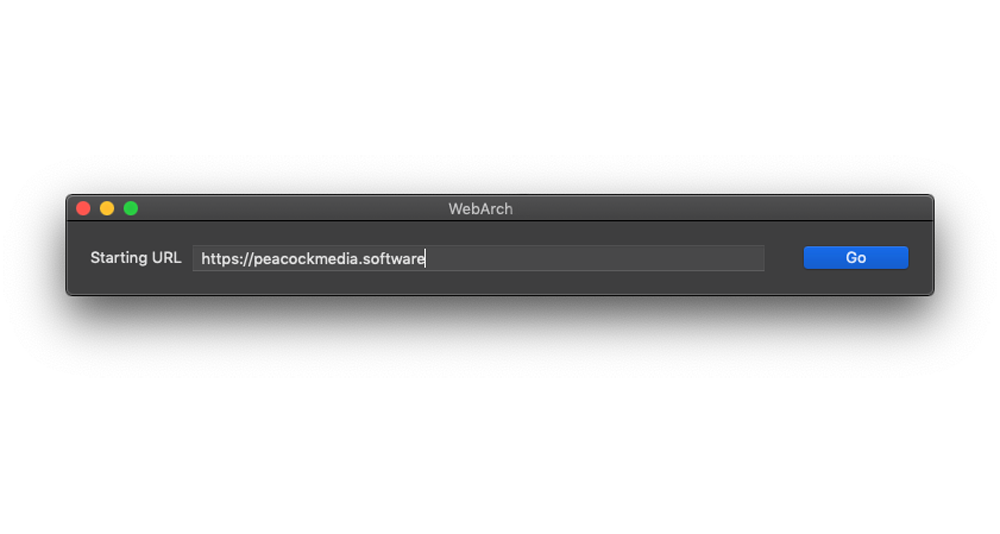

- Crawl a website, save all files locally.
- Very simple interface: simply enter your page URL and press start. When the crawl finishes, you'll see a save dialog.
- Powerful crawl settings, allowing for rate limiting, blacklisting / whitelisting, setting of the user-agent string (spoofing) and more.
- Runs locally, not a cloud service. Own your own data.
- Options to preserve all files exactly as they were fetched under their original filenames, or to process them to allow browsing of the local copy.
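
To make the crawl settings above more concrete, here is a minimal Python sketch of what rate limiting, blacklisting / whitelisting and a custom user-agent string typically amount to in a crawler. It is an illustration only and not this application's implementation: the names `SETTINGS`, `is_allowed`, `RateLimiter` and `build_request`, the substring-matching rule, and the example URLs are all assumptions made for the example.

```python
import time
from urllib.request import Request

# Hypothetical settings; the keys and values are illustrative only.
SETTINGS = {
    "user_agent": "Mozilla/5.0 (compatible; ExampleCrawler/1.0)",
    "min_delay_seconds": 0.5,            # rate limit: at most one request per 0.5 s
    "whitelist": ["example.com/docs"],   # only URLs containing one of these terms
    "blacklist": ["/private/", ".zip"],  # never URLs containing one of these terms
}


def is_allowed(url, whitelist, blacklist):
    """Return True if the URL passes both lists (assumed substring matching).

    The blacklist takes priority; an empty whitelist allows everything
    that is not blacklisted.
    """
    if any(term in url for term in blacklist):
        return False
    return not whitelist or any(term in url for term in whitelist)


class RateLimiter:
    """Enforce a minimum delay between consecutive requests."""

    def __init__(self, min_delay, clock=time.monotonic, sleep=time.sleep):
        self._min_delay = min_delay
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous request, if any

    def wait(self):
        """Block until at least min_delay has passed since the last call."""
        if self._last is not None:
            remaining = self._min_delay - (self._clock() - self._last)
            if remaining > 0:
                self._sleep(remaining)
        self._last = self._clock()


def build_request(url, user_agent):
    """Create a request that presents the chosen user-agent string."""
    return Request(url, headers={"User-Agent": user_agent})
```

In a crawl loop, each discovered URL would first be checked with `is_allowed`, then `RateLimiter.wait()` would be called before the request from `build_request` is sent.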